Questions for Cloudflare

Note: This article has earned a lot of criticism for being shallow, reacting too much to a fast preliminary analysis, being dismissive of Cloudflare’s excellent robustness track record, and being biased by hindsight. I’ll respond to all four points in turn:

- Being shallow was my intention. For readers who want a deeper analysis, I suggest the AWS analysis I published a couple of weeks ago.

- I don’t think I’m reacting too much to a fast preliminary analysis. The main thrust (the missing feedback paths) took all of 20 minutes to figure out. It should come up even in a fast preliminary analysis, ahead of some of the remediations that Cloudflare’s preliminary analysis did suggest.

  When I say I expect a more thorough analysis after just a few hours, I don’t mean I expect them to have spent more effort. I mean I hoped for a method that casts a wide net early on and reveals all major deficiencies at a low resolution, leaving the details of those deficiencies to be uncovered with further effort. In my experience, what tends to happen otherwise is that the analysis team locks onto the initial discoveries and forgets to broaden the analysis later. That’s why I am a proponent of making it broad from the start.

- Being dismissive of Cloudflare’s track record was not intentional. I’m sorry. They’re doing a great job! Maybe that’s precisely why I was caught off guard by the fact that they don’t mention feedback paths in their follow-up steps.

- I think the questions below are biased by hindsight only as much as any accident analysis is. To find these problems before an outage happens, I’d still suggest STPA, which works both when guided by hindsight and when applied early in the design phase.

That said, let’s get on to the apparently controversial stuff.

Cloudflare just had a large outage that brought down significant portions of the internet. They have written up a useful summary of the design errors that led to it. When something similar recently happened to AWS, I wrote a detailed analysis of what went wrong, with some pointers to what else might be going wrong in that process.

Today, I’m not going to model the system in such detail, but a system-theoretic model of it raises some questions that I could not find answered in the accident summary Cloudflare published, and that I would want answered before putting Cloudflare between me and my users.

In summary, the blog post and the fixes Cloudflare suggests mention a lot of control paths, but very few feedback paths. This confuses me, because the main problems in this accident do not seem to have been caused by a lack of control.

The initial protocol mismatch in the features file is a feedback problem (the problem is getting an overview of internal protocol conformance), and during the accident they had the control action needed to fix the issue: copy an older features file. The reason they couldn’t do so right away was that they had no idea what was going on.
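
To make the feedback point concrete, here is a minimal sketch of a consumer that treats the features file as a protocol: it checks each new file against a declared shape, keeps the last known-good file when the check fails, and, crucially, reports the rejection instead of crashing or falling back silently. Everything in it is hypothetical: the `FeatureFileLoader` class, the `MAX_FEATURES` limit, the one-name-per-line format, the feature names and the `report` callback are illustrative assumptions, not Cloudflare’s actual design.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

# Illustrative limit (an assumption, not a value from Cloudflare's write-up).
MAX_FEATURES = 200


@dataclass
class FeatureFileLoader:
    """Hypothetical consumer that treats the features file as a protocol.

    A file that violates the expected shape is rejected, the last known-good
    file stays active, and the rejection is reported through `report`, so the
    mismatch becomes a feedback signal instead of a crash or a silent fallback.
    """
    report: Callable[[str], None]        # hypothetical feedback channel
    active: Optional[List[str]] = None   # last known-good list of feature names
    rejected: int = 0                    # count of rejected files, for monitoring

    def validate(self, features: List[str]) -> Optional[str]:
        """Return a description of the first violation found, or None."""
        if not features:
            return "file is empty"
        if len(features) > MAX_FEATURES:
            return f"{len(features)} features exceeds the limit of {MAX_FEATURES}"
        if len(set(features)) != len(features):
            return "file contains duplicate feature names"
        return None

    def load(self, text: str) -> bool:
        """Activate a new file (one feature name per line) if it conforms."""
        features = [line.strip() for line in text.splitlines() if line.strip()]
        problem = self.validate(features)
        if problem is not None:
            self.rejected += 1
            kept = len(self.active) if self.active is not None else 0
            self.report(f"rejected features file: {problem}; "
                        f"still using previous file with {kept} features")
            return False
        self.active = features
        return True


if __name__ == "__main__":
    loader = FeatureFileLoader(report=print)
    loader.load("ua_entropy\nrequest_rate\n")   # accepted
    loader.load("ua_entropy\nua_entropy\n")     # rejected and reported
```

The part that matters is the `report` call. Falling back to the old file is a control action; only the report turns a protocol mismatch into something a person, or the generator, can notice and act on.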

Thus, the two critical questions are:

- Does the Cloudflare organisation deliberately design the human–computer interfaces used by their operators?

- Does Cloudflare actively think about how their operators can get a better understanding of the ways in which the system works, and doesn’t work?

The Cloudflare blog post suggests the answer to both is no.

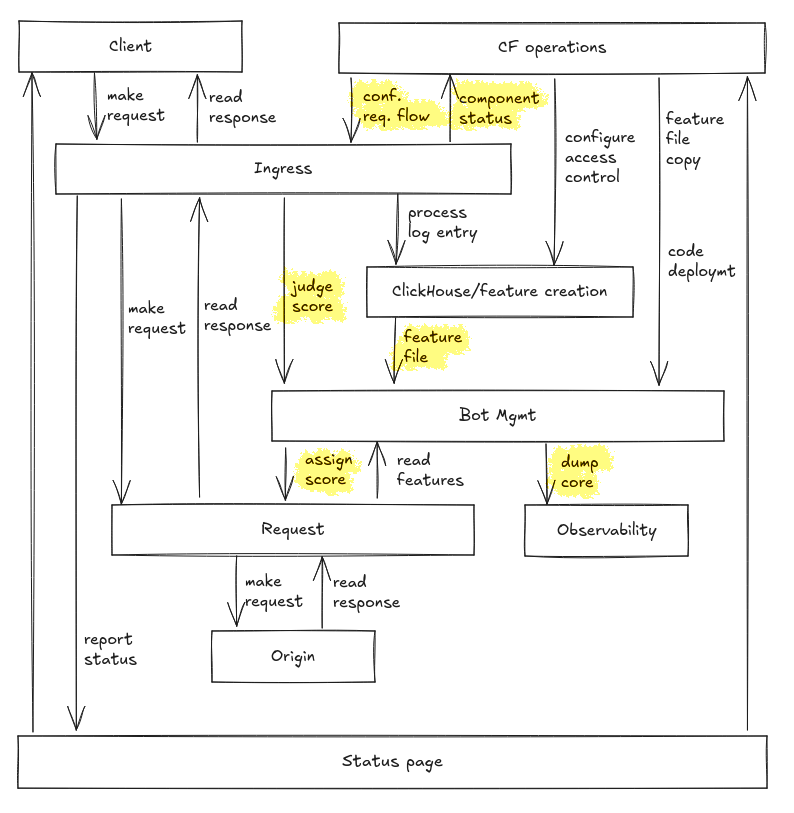

There are more questions for those interested in details. First off, the figure above is a simplified control model, pieced together as best I could in a few minutes. We’ll focus on the highlighted control actions because they were the most proximate to the accident in question.

Storming through the STPA process very sloppily, we come up with several questions that the report does not bring up (and that common accident analysis approaches based on chain-of-events or Swiss cheese models, such as root cause analysis and the Five Whys, typically miss).

- What happens if Bot Management takes too long to assign a score? Does the request pass on to the origin by default after a timeout, or is it denied by default? Or is there no timeout at all, so that Cloudflare holds the request until the client is tired of waiting?

- Depending on how Bot Management is built and how it interacts with timeouts, can it assign a score to a request that is gone from the system, i.e. one that has already been passed on to the origin or has even produced a response back to the client? What are the effects of that? (I have been informed that the Bot Management call happens in-line during request processing, so request processing cannot continue until the Bot Management call either completes or fails.)

- What happens if Bot Management tries to read features from a request that is gone from the system? (See the note on the previous question.)

- Can Ingress call for a score judgment when Bot Management is not running? What are the effects of that? What happens if Ingress thinks Bot Management did not assign a score even though it did?

- How are requests treated when there’s a problem processing them – are they passed through or rejected?

- The feature file is a protocol used to communicate between services. Is this protocol (and any other such protocols) well-specified? Are engineers working on both sides of the communication aware of that? How does Cloudflare track compliance of internal protocol implementations?

- How long can Bot Management run with an outdated features file before someone is made aware? Is there a way for Bot Management to fail to pick up a newly created features file? Will the features file generator be made aware?

- Can the feature file generator create a feature file that is not signalful of bottiness? Can Bot Management tell some of these cases apart and choose not to apply a score derived from such features? Does the feature file generator get that feedback?

- What is the process by which Cloudflare operators can reconfigure request flow, e.g. toggle out misbehaving components? But perhaps more critically, what sort of information would they be basing such decisions on?

- What is the feedback path to Cloudflare operators from the observability tools that annotate core dumps with debugging information? They consume significant resources, but are their results mostly dumped somewhere nobody looks?

- Aside from the coincidentally unavailable status page, what other pieces of misleading information did Cloudflare operators have to deal with? How can that be reduced?
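
The question about outdated features files has a cheap, generic answer: have the consumer compare the age of the file it is actually using against the generator’s expected cadence, and raise an alarm after a few missed generations. A minimal sketch, assuming a hypothetical `GENERATION_INTERVAL_S` cadence and tolerance; neither value comes from Cloudflare’s write-up, and `staleness_alarm` is an illustrative name.

```python
import time
from typing import Optional

# Both values are illustrative assumptions, not Cloudflare's real cadence.
GENERATION_INTERVAL_S = 300.0   # how often the generator is expected to publish
MISSED_GENERATIONS_ALLOWED = 3  # tolerate a few late files before alarming


def staleness_alarm(active_file_created_at: float,
                    now: Optional[float] = None) -> Optional[str]:
    """Return an alarm message if the features file in use is too old, else None.

    `active_file_created_at` is the generator's timestamp embedded in the file
    the consumer is *using*, not the newest file that exists somewhere. Checking
    the file in use catches both a stalled generator and a consumer that never
    picked up a new file.
    """
    now = time.time() if now is None else now
    age = now - active_file_created_at
    limit = GENERATION_INTERVAL_S * MISSED_GENERATIONS_ALLOWED
    if age > limit:
        return (f"features file in use is {age:.0f}s old "
                f"(limit {limit:.0f}s); notify operators and the generator")
    return None
```

The `now` parameter exists only to make the check easy to test; in production it would default to the wall clock.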

I don’t know. I wish technical organisations would be more thorough in analysing their systems for these kinds of problems before they happen, especially when they have apparently blocking calls to exception-throwing processes in the middle of 10 % of web traffic.
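
A blocking call to a process that can throw has a generic shape worth sketching: bound the wait, contain the exception, and choose the fallback explicitly instead of by accident. This is a minimal Python sketch, not how Cloudflare’s proxy is built; `score_with_policy`, `FailPolicy`, the scorer callable and the timeout value are hypothetical stand-ins. Whether failing open or failing closed is right is exactly the kind of question the list above asks.

```python
import concurrent.futures
from enum import Enum
from typing import Callable, Tuple, Union


class FailPolicy(Enum):
    """What happens to a request when no bot score is available."""
    FAIL_OPEN = "pass the request on to the origin unscored"
    FAIL_CLOSED = "reject the request"


# Shared worker pool for scoring calls; the size is arbitrary for this sketch.
_POOL = concurrent.futures.ThreadPoolExecutor(max_workers=4)


def score_with_policy(score: Callable[[dict], float],
                      request: dict,
                      timeout_s: float,
                      policy: FailPolicy,
                      report: Callable[[str], None]
                      ) -> Tuple[str, Union[float, FailPolicy]]:
    """Call a hypothetical bot scorer with a deadline and an explicit fallback.

    Returns ("scored", value) on success and ("unscored", policy) otherwise.
    Every fallback is reported, so degraded operation is visible to operators.
    """
    future = _POOL.submit(score, request)
    try:
        return ("scored", future.result(timeout=timeout_s))
    except concurrent.futures.TimeoutError:
        # The worker thread keeps running; its late score is never read, so
        # it cannot be applied to a request that has already moved on.
        report(f"bot scoring timed out after {timeout_s}s; applying {policy.name}")
    except Exception as exc:
        # The scorer raised (for example on a malformed features file).
        report(f"bot scoring failed with {exc!r}; applying {policy.name}")
    return ("unscored", policy)
```

One limitation is deliberate: Python threads cannot be cancelled, so the sketch only discards late results. A real proxy would also need to bound the work itself.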

I don’t expect a Cloudflare engineer to have a shower thought about this specific problem before it happens; I expect Cloudflare as an organisation to adopt processes that let them systematically find these weaknesses before they become problems. Things like STPA are right there and they work – why not use them?
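
To illustrate how mechanical the first, sloppy pass of STPA can be, here is a sketch that crosses each control action with STPA’s four general types of unsafe control action (not provided, provided when it causes a hazard, provided too early, too late or out of order, and stopped too soon or applied too long) to produce a checklist of questions. The controller and control action names in `ACTIONS` are my hypothetical reading of the highlighted actions in the control model, not Cloudflare’s terminology, and each generated question still has to be checked against the system’s hazards by a person.

```python
from itertools import product
from typing import List, Tuple

# STPA's four general types of unsafe control action (UCA).
UCA_TYPES = [
    "is not provided when it is needed",
    "is provided when it causes a hazard",
    "is provided too early, too late, or out of order",
    "is stopped too soon or applied too long",
]


def uca_questions(controller_actions: List[Tuple[str, str]]) -> List[str]:
    """Generate one question per (controller, control action, UCA type) triple."""
    return [
        f"What happens if {action} from {controller} {uca}?"
        for (controller, action), uca in product(controller_actions, UCA_TYPES)
    ]


# Hypothetical reading of the highlighted control actions in the model.
ACTIONS = [
    ("Ingress", "a score request"),
    ("Bot Management", "a bot score"),
    ("the feature file generator", "a new features file"),
]

if __name__ == "__main__":
    for question in uca_questions(ACTIONS):
        print(question)
```

Several of the questions listed earlier fall straight out of this grid; for example, "a bot score from Bot Management is provided too early, too late, or out of order" is the question about scoring a request that is already gone.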